Gradient Descent and Cost Function

How do Gradient Descent and the Cost Function work in ML?

In ML, the goal is to find the weights that minimize the cost function.

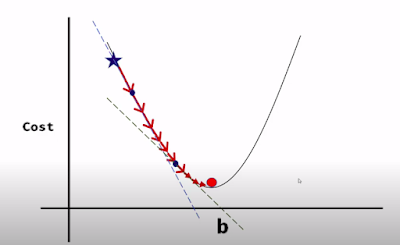

The image above shows the global minimum we are looking for, but how do we reach it? We take small steps whose size is controlled by the learning rate. At every point you find the tangent (the slope of the cost curve) and use it to find the next point. As the arrows in the image show, the steps become smaller and smaller as the slope flattens near the minimum.

How do we know which learning rate we need? It is basically trial and error: you try different learning rates until one of them reaches the global minimum.

If your learning rate is too large, the steps may overshoot and miss the global minimum.
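To make the overshoot concrete, here is a minimal sketch on a toy cost function, f(x) = x², whose minimum is at x = 0. The function name `descend`, the starting point, and the two learning rates are illustrative choices, not values from the article.

```python
def descend(learning_rate, start=5.0, steps=20):
    """Run plain gradient descent on f(x) = x**2, whose gradient is 2*x."""
    x = start
    for _ in range(steps):
        gradient = 2 * x                    # slope of the tangent at x
        x = x - learning_rate * gradient    # step against the slope
    return x

# With 0.1, each step multiplies x by 0.8, so x shrinks toward 0.
small = descend(learning_rate=0.1)

# With 1.1, each step multiplies x by -1.2, so x jumps across the
# minimum and ends up farther away every time.
large = descend(learning_rate=1.1)

print(f"learning_rate=0.1 -> final x = {small:.4f}")
print(f"learning_rate=1.1 -> final x = {large:.4f}")
```

With the small rate the final x ends up close to 0, while with the large rate it moves away from the minimum instead of toward it.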

Our linear regression line equation is: y = mx + b

where m is the slope and b is the intercept.

The step size is the learning rate multiplied by the slope of the cost function at the current point. How you find it is shown in the image below:
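Written out, and assuming the usual mean squared error cost for the line y = mx + b, the step could look like the following. The symbols n (number of data points) and α (learning rate) are notation introduced here for illustration.

```latex
% Cost function: mean squared error over n points (x_i, y_i)
J(m, b) = \frac{1}{n} \sum_{i=1}^{n} \bigl( y_i - (m x_i + b) \bigr)^2

% Partial derivatives (the slopes with respect to m and b)
\frac{\partial J}{\partial m} = -\frac{2}{n} \sum_{i=1}^{n} x_i \bigl( y_i - (m x_i + b) \bigr)
\qquad
\frac{\partial J}{\partial b} = -\frac{2}{n} \sum_{i=1}^{n} \bigl( y_i - (m x_i + b) \bigr)

% Update: step size = learning rate times slope
m \leftarrow m - \alpha \, \frac{\partial J}{\partial m}
\qquad
b \leftarrow b - \alpha \, \frac{\partial J}{\partial b}
```

Because the step is α times the slope, it shrinks on its own as the slope flattens near the minimum.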

Gradient Descent and Cost Function in Python:

First, we import the library:
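A minimal sketch of what such a script might look like, assuming NumPy and a mean squared error cost. The function names `cost_function` and `gradient_descent`, the sample data, and the hyperparameters are all illustrative assumptions, not the original listing.

```python
import numpy as np

def cost_function(x, y, m, b):
    """Mean squared error of the line y = m*x + b on the data."""
    return np.mean((y - (m * x + b)) ** 2)

def gradient_descent(x, y, learning_rate=0.05, iterations=1000):
    """Fit y = m*x + b by repeatedly stepping against the MSE gradient."""
    m, b = 0.0, 0.0              # start from an arbitrary guess
    n = len(x)
    for _ in range(iterations):
        residual = y - (m * x + b)
        dm = -(2 / n) * np.sum(x * residual)   # slope of cost w.r.t. m
        db = -(2 / n) * np.sum(residual)       # slope of cost w.r.t. b
        m -= learning_rate * dm                # step = learning rate * slope
        b -= learning_rate * db
    return m, b

# Made-up data that lies exactly on y = 2x + 3
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([5.0, 7.0, 9.0, 11.0, 13.0])

m, b = gradient_descent(x, y)
print(f"m = {m:.4f}, b = {b:.4f}, cost = {cost_function(x, y, m, b):.6f}")
```

On this data the fitted m and b should land close to 2 and 3. A noticeably larger learning rate makes the updates diverge here, which is the trial-and-error tuning described earlier.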

OUTPUT :

If you want to visualise this output, it looks like this:
